Training models is the new creative process

By Jason Rosenberg, Creative Director

What’s inside

Why we turned our company swag into a Brand Style Solution

Making the dataset

Training the model

The challenge of matching the model to the product

The moment it finally clicked and what it means for the future of brand creativity

Introduction: The AI reality check

Anyone working in a creative industry right now feels it.

AI is hard.

You wouldn’t know it from my LinkedIn feed, though. Every week there’s another wave of launches, each one promising to reinvent creative production. It’s a constant parade of flawless, cherry-picked demos that makes it seem like anything is possible. But it’s an illusion.

The frustration comes when you try it yourself. The outputs never look the same, the workflow falls apart, and you realize those results were built under perfect conditions you’ll never have. After all, you’re not the expert on what makes that one model sing, and no single tool can do it all.

There is no god model.

By the time most brands come to us, they’ve already been through the cycle. They’ve tested the new tools, maybe even one of those “train your own model” platforms that promise instant brand perfection, and ended up realizing the same thing: it’s not there yet. And the problem isn’t that these tools don’t work, it’s that they’re not built for the nuances of your brand.

“Out-of-the-box AI gives you in-the-same-box-as-everyone-else AI.”

That's why we built Stable Socks, a completely made-up brand inspired by our company swag. We wanted to show what really happens when you build an actual custom model around a brand. We do this for customers constantly. At Stability AI we call it the Brand Style Solution. But this time we needed to do it for ourselves. Something fully ours. So we took our socks, built a brand identity around them, and trained a model that could generate endless campaign visuals.

The catch? The process wasn't magic. It was a creative grind. I stepped into our customers' shoes as the creative lead, working hand-in-hand with our incredible applied research team, Dennis Niedworok and Katie May, through seven meticulous iterations of the model.

We refined, we retrained, and we pushed until it finally clicked.

And that’s when it felt like magic.

This is the real story of how we got there.

➊ The product and dataset

I’ve started thinking about custom models like baking. You can have a perfect recipe, but with poor ingredients, you’ll never get the result you’re hoping for. For AI, the ingredients are your data. And curating that data is a skill in itself.

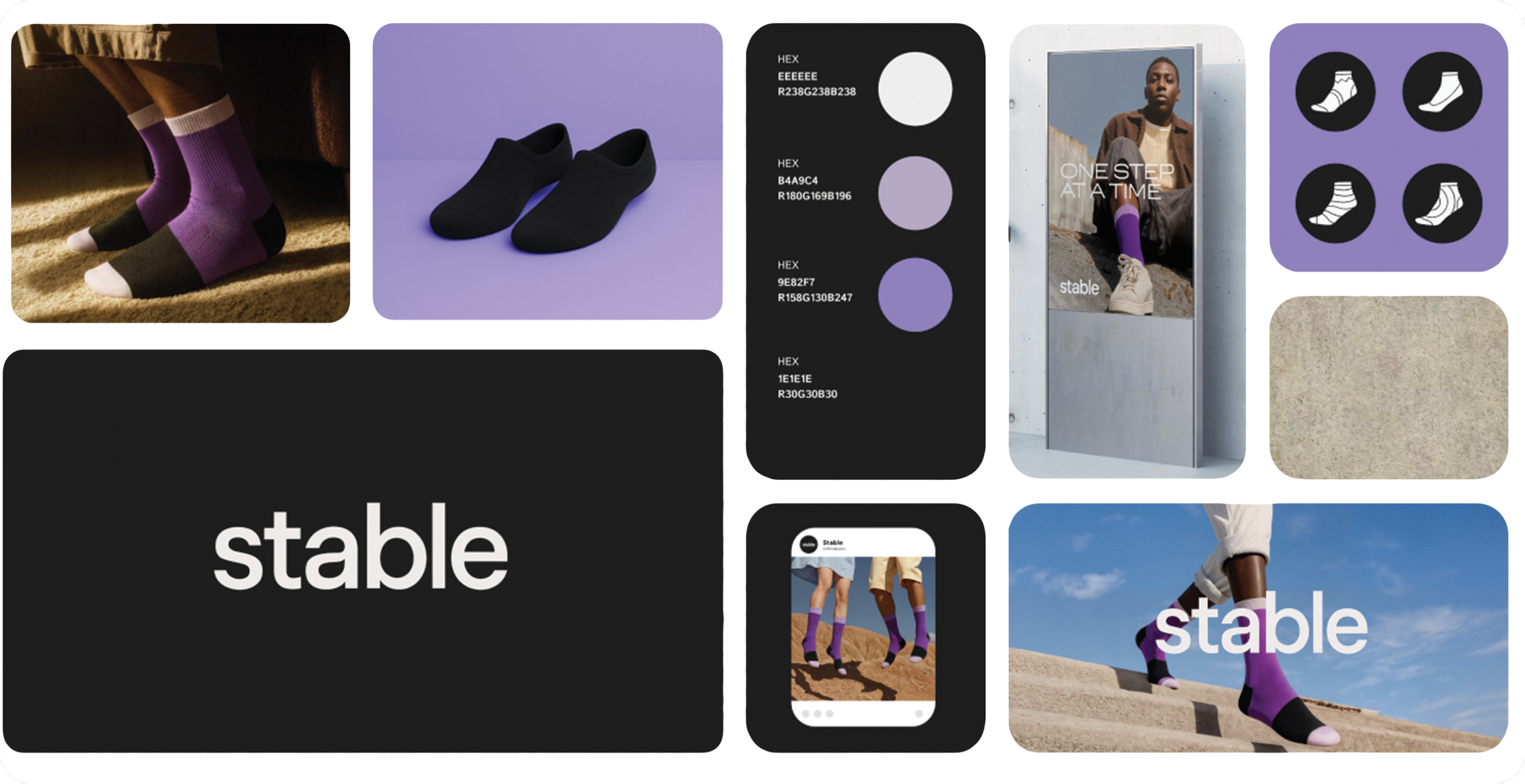

Working with a great designer, we started by building the Stable Socks brand. This was the fun part. What did the product actually look like? What were the colors, textures, and cut of the sock? What kind of campaign visuals fit the brand? Were we seeing lifestyle photography or surreal floating product shots?

As we built out the brand, we answered those questions one by one. The result was a clear visual identity that became the foundation for everything that followed. Here’s an overview of the brand.

The Brand:

Style: Lifestyle photography with unexpected poses for a sock brand.

Palette: Product built from Stability AI's colors, complemented with warm earth tones.

Aesthetic: Natural light, visual grain, desaturated, lower contrast.

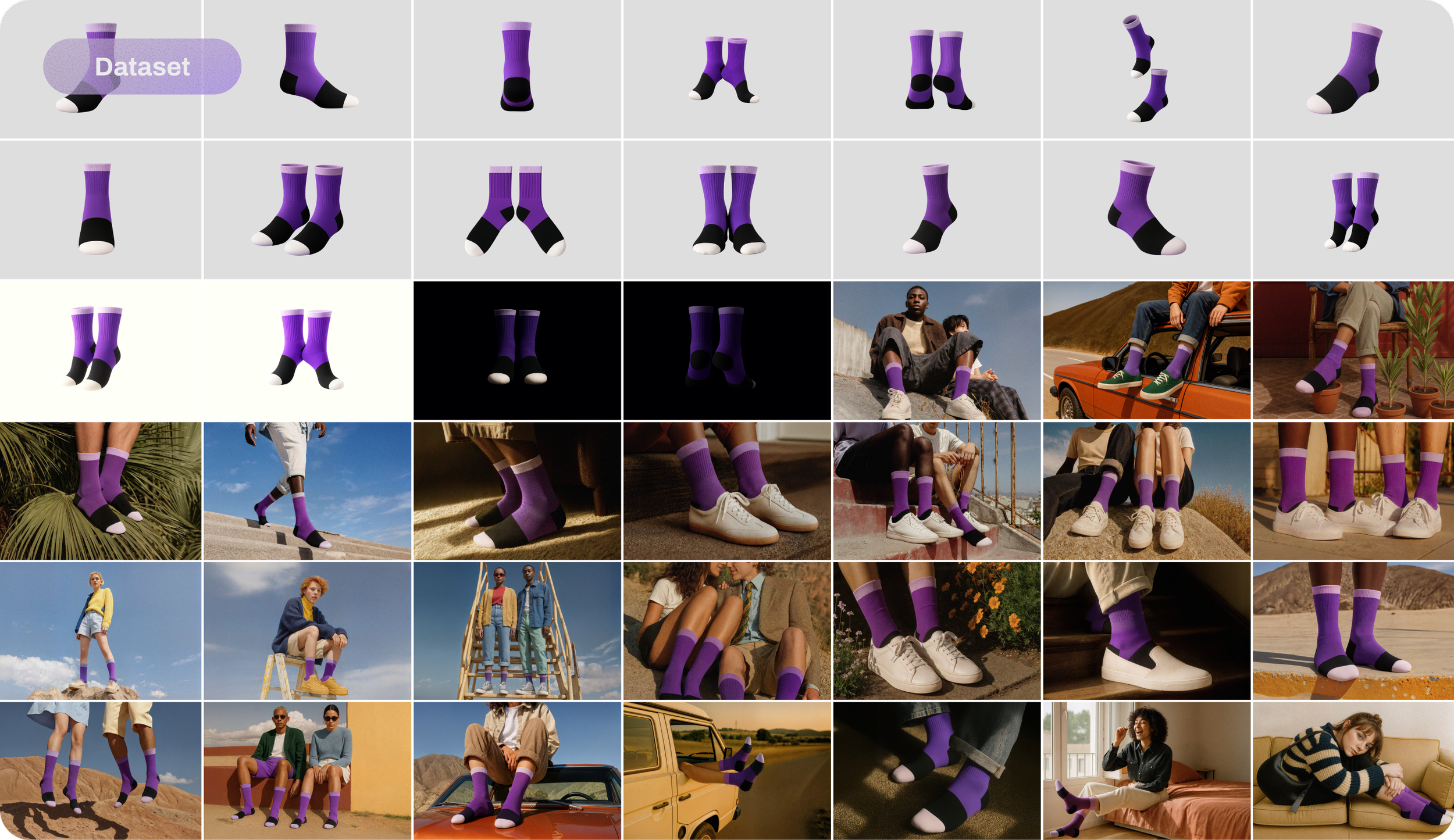

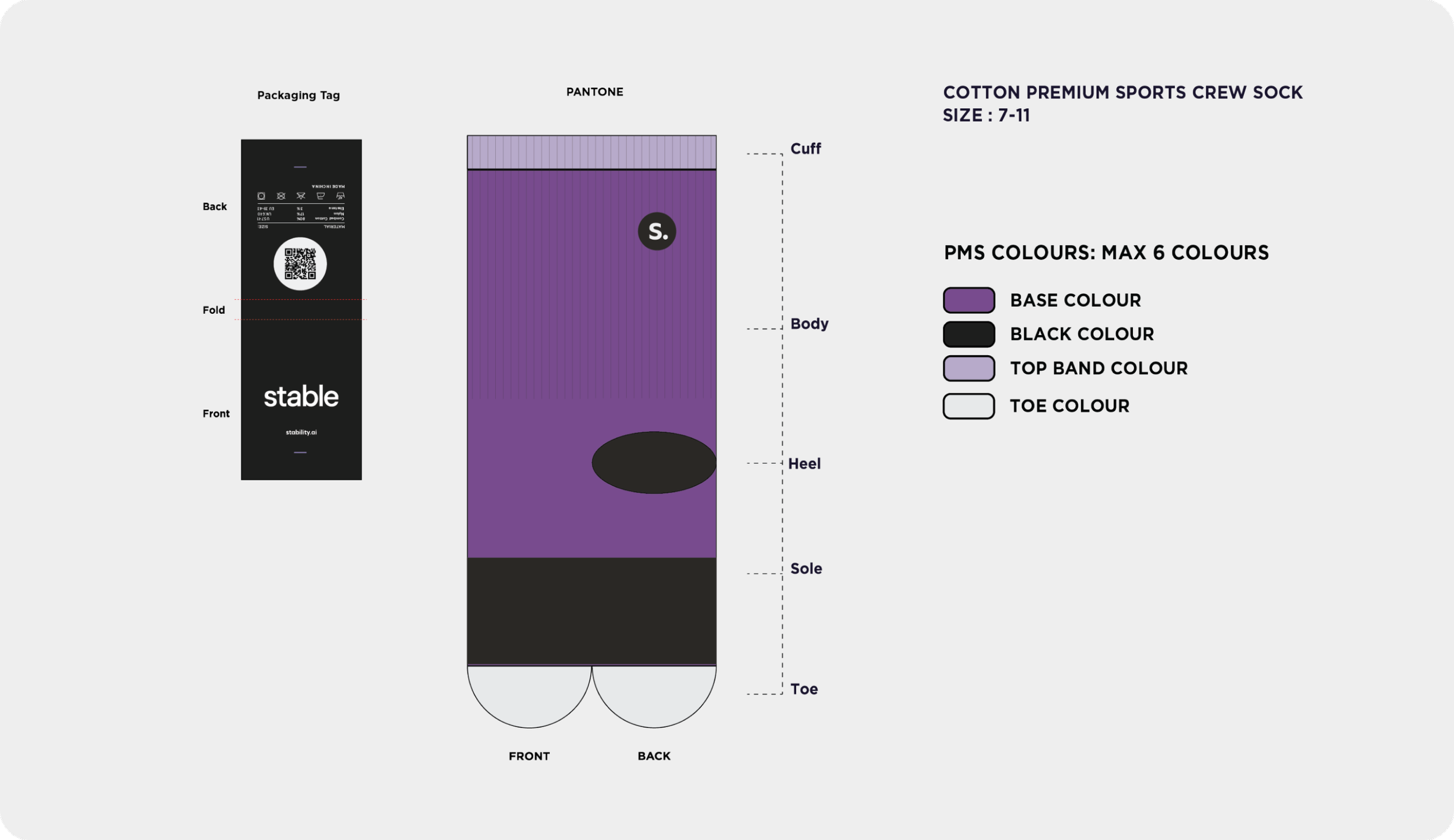

For the dataset, we focused on a collection of images that captured the product from different angles, in different lighting, and in poses that reflected all the brand attributes we had defined.

Because the product didn’t exist yet, our dataset had to be completely synthetic. That was the challenge. Using every tool available, along with plenty of manual Photoshop work, we built a set of more than 30 images that represented the product exactly the way we imagined it.

A synthetic dataset like this isn’t just useful for projects like ours. It can also help brands who are in the product development stage, giving creative teams a way to build visual assets in parallel with the product. Most brands, of course, already have a head start with years of photography and branded visuals ready to train on. We were starting from zero, which made this a true test of how powerful the process can be even without a finished product.

Comparing this dataset to the sock design sent to the vendor.

➋ Training the model

Model 1: Calibrating the vision

The first version of the model was nowhere close to where we ended up. This is where the creative process really begins.

Let's take a look at it.

So what am I seeing here? The points below became topics for discussion, a back-and-forth between me and the applied research team.

My initial critique

Product inconsistency: The product must be 100% accurate. It can’t change from one image to the next.

That AI look: Overly smooth skin textures and faces that felt synthetic.

Oversaturated colors: Colors are cooked, with too much saturation and contrast. This isn’t our brand.

The issues with the first version were obvious. It was a great tool to align on our north star, but we didn't spend too much time testing it.

Models 2 and 3: Closing the gap

Models two and three happened quickly, and the feedback was nearly identical between them.

What was improving

The warm, earthy color palette was emerging.

Textures in clothing were getting better.

The sock was becoming more consistent (but still not perfect).

What could be better

Low prompt adherence

Textures are too smooth

Claw-shaped toes feel unrealistic

Faces are too AI

Contrast and saturation too strong

Lack of pose variety

Model 4: Where craft takes over

By now, we’d talked through every nuance of the Stable Socks brand. And we’d come to a conclusion: while most of the issues could be solved with the model, the real breakthrough happens in the workflow that surrounds it.

It’s worth calling out here: the goal wasn’t just to train a model, it was to build a workflow.

“Workflows allow the model to work alongside a set of controls to create final, brand-right outputs.”

Behind the scenes, that can mean adjusting color correction, model strength, seed randomization, negative prompts, and dozens of quiet decisions running under the hood.
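To make that concrete, here is a minimal sketch of how such controls could be bundled behind a simple interface. It is not our actual workflow: the `WorkflowSettings` class, the `build_request` function, the field names, the `model_strength` default, and the example negative prompt are all hypothetical. Only the contrast and saturation values come from this project.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class WorkflowSettings:
    """Hypothetical bundle of the controls that sit around a custom model."""
    model_strength: float = 0.8   # assumed 0..1 weight for the custom model
    negative_prompt: str = "plastic skin, oversaturated colors"  # made-up example
    contrast: int = -20           # post-processing values, treated as percent
    saturation: int = -10
    seed: Optional[int] = None    # None means "randomize the seed on every run"


def build_request(prompt: str, settings: WorkflowSettings,
                  rng: Optional[random.Random] = None) -> dict:
    """Merge the creative's prompt with the preset controls."""
    rng = rng or random.Random()
    # Seed randomization: use the pinned seed if there is one, else draw a new one.
    seed = settings.seed if settings.seed is not None else rng.randrange(2**32)
    return {
        "prompt": prompt,
        "negative_prompt": settings.negative_prompt,
        "model_strength": settings.model_strength,
        "seed": seed,
        "post": {"contrast": settings.contrast, "saturation": settings.saturation},
    }
```

The point of the design is that the person writing the prompt only touches `prompt`. Everything else is a preset someone else tuned, and pinning `seed` is how you reproduce a result you liked.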

What I see as the brand leader is a clean, purpose-built UI. But behind that simplicity lives a workflow sculpted by our research team. If you want to go deeper into how that comes together, Alex Gnibus, PPM at Stability AI, covers it brilliantly in this article about our Brand Style Solution.

The workflow creation phase is where the process starts to feel familiar again. Talking through this stage feels like sitting beside a retoucher, describing tone, warmth, and mood until it lands. Less cyan in the skin tone. Bring down the saturation. Warm up the whites. Add grain to the texture.

In my opinion, a lot of the intangibles of the brand came together at this moment. A post-processing stage that combined color correction with tactile visual grain suddenly brought it all together. The images finally carried the mood and texture we had been chasing from the start.

Color correct:

Contrast: -20

Saturation: -10

Gamma: 1.0

Grain:

Grain power: 0.20

Grain scale: 1.0

Grain saturation: 1.0
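For readers who want to see what settings like these might do to a pixel, here is a rough sketch in plain Python. It is an illustration under assumptions, not the implementation our workflow uses: I am assuming contrast and saturation are percentage offsets, gamma is a standard power curve, grain power is the spread of the added noise, and grain saturation controls how colorful that noise is. Grain scale is left out because it describes grain size across neighboring pixels, and this sketch works one pixel at a time.

```python
import random

LUMA = (0.2126, 0.7152, 0.0722)  # Rec. 709 luma weights


def clamp(x):
    """Keep a channel value inside the displayable 0..1 range."""
    return max(0.0, min(1.0, x))


def color_correct(pixel, contrast=-20, saturation=-10, gamma=1.0):
    """pixel is an (r, g, b) tuple of floats in 0..1."""
    c = 1.0 + contrast / 100.0
    s = 1.0 + saturation / 100.0
    # Contrast: pull every channel toward (or push it away from) mid-gray.
    rgb = [(v - 0.5) * c + 0.5 for v in pixel]
    # Saturation: pull every channel toward the pixel's own brightness.
    luma = sum(w * v for w, v in zip(LUMA, rgb))
    rgb = [luma + (v - luma) * s for v in rgb]
    # Gamma: 1.0 leaves the tones unchanged.
    return tuple(clamp(v) ** (1.0 / gamma) for v in rgb)


def add_grain(pixel, rng, power=0.20, grain_saturation=1.0):
    """Add random noise; grain_saturation=0 makes the grain monochrome."""
    noise = [rng.gauss(0.0, 1.0) for _ in range(3)]
    mono = sum(noise) / 3.0
    noise = [mono + (n - mono) * grain_saturation for n in noise]
    return tuple(clamp(v + power * n) for v, n in zip(pixel, noise))
```

Running a pure red pixel `(1.0, 0.0, 0.0)` through `color_correct` with the defaults gives a red that is softer and less intense, which is the "less cooked" look we were after. A real tool would apply this to whole images and would likely scale the grain differently.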

You can see how valuable this post-processing layer is when you compare an output with and without it.

The verdict:

Post-Processing: A resounding success. The mood and texture were finally right.

Prompt Adherence: Better, but not perfect.

Product Consistency: Improved, but still an occasional struggle.

When you put it all together, this is what model 4 gave us.

We were getting closer. With each model, the outputs were noticeably better.

➌ When reality doesn't match the model

Just as we were reaching the finish line, the vendor sent us the actual socks.

It was time for the real test, and for an answer to the question: does the model match the product?

We weren’t exactly confident. The vendor had already warned us they couldn’t match the yarn color exactly, and a few design tweaks had slipped in along the way.

This is the moment every brand eventually runs into. You realize the model and the product aren’t perfectly in sync. You can’t change the product, but you can change the model. So it becomes a back-and-forth, this constant dance of trying to close the gap between what’s real and what’s generated.

That’s the real game here: consistency.

“Teaching the model to see the product the way you do.

Not ‘close enough,’ but right.”

So we went back under the hood, refined the dataset, and kept pushing until the generated socks felt as real as the pair sitting on my desk.

➍ Finalizing the model

The next model I tested was the one. Technically, it was Model 7.

This part of the project mirrored what our customers experience when they build their own Brand Style models. I was stepping into their role as the brand leader, guiding the look and feel, while our research team refined the mechanics behind the scenes. When I asked Dennis and Katie what changed between Models 5 and 6, they explained that they manually adjusted captions and cleaned up the dataset to eliminate anything that was throwing off consistency.

As Dennis put it, “The hand-made captions helped align the product and keep it close to 100% accurate, avoiding context bleeding and making sure the sock is the main concept.”

That part alone could fill an entire blog post.

Then came Model 7.

And this was the moment when the promise of AI came back to me. The results had the tone, texture, and warmth we imagined from the start. It looked and felt like the brand we created, not a simulation of it.

It wasn’t magic. It was weeks of testing, feedback, and collaboration.

“Every round brought us closer until the model understood the brand as clearly as we did.”

Seeing it come to life brought the same satisfaction you get when a creative vision finally clicks.

So, here it is. The final model.

Final thoughts

After weeks of testing, tweaking, and questioning everything, the biggest surprise was how human the process felt. The back and forth, the small creative decisions, the “this just doesn’t feel right yet” moments. It was the same rhythm every creative knows.

Training a custom model didn’t change that. It is just another form of the creative process.

When the model finally started performing the way we imagined, it stopped being about socks and started being about possibility. What happens when a creative team has a model that truly understands their brand? You stop thinking about what’s feasible and start imagining what’s possible.

That’s the shift. We’re no longer limited by budgets, timelines, or logistics. Suddenly, we can create visuals that would have been out of bounds before. It’s all possible: a horse in your shoot, the camera underwater, your talent floating in the sky. Now the world expands as fast as the idea does. And the best part is that you’re not replacing the creative process. You’re scaling it. You’re expanding what’s possible without losing what makes it yours.

If we can train a model to capture the tone, texture, and personality of a pair of socks, imagine what’s possible for a brand built to stand for something bigger.

If you want to see the final model in action, check out the demo.

Or if you're ready to talk about building something custom for your brand, let's talk.