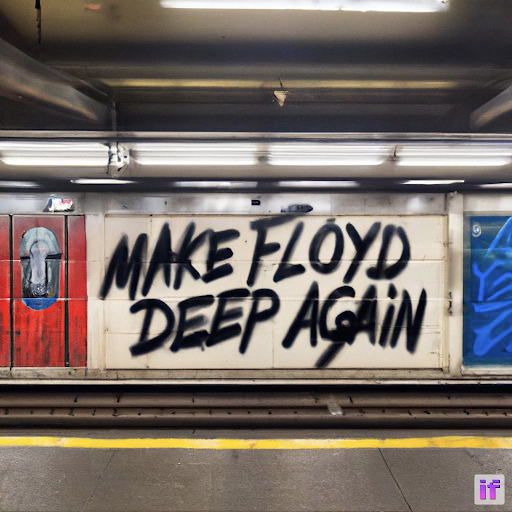

Stability AI releases DeepFloyd IF, a powerful text-to-image model that can smartly integrate text into images

Today Stability AI and its multimodal AI research lab DeepFloyd announced the research release of DeepFloyd IF, a powerful text-to-image cascaded pixel diffusion model.

DeepFloyd IF is a state-of-the-art text-to-image model released on a non-commercial, research-permissible license that allows research labs to examine and experiment with advanced text-to-image generation approaches. In line with other Stability AI models, Stability AI intends to release a DeepFloyd IF model fully open source at a future date.

Description and Features

Deep text prompt understanding:

The generation pipeline utilizes the large language model T5-XXL-1.1 as a text encoder. A large number of text-image cross-attention layers also provide better alignment between prompt and image.

Application of text description into images:

Incorporating the intelligence of the T5 model, DeepFloyd IF generates coherent and clear text alongside objects of different properties appearing in various spatial relations. Until now, these use cases have been challenging for most text-to-image models.

A high degree of photorealism:

This property is reflected by the impressive zero-shot FID score of 6.66 on the COCO dataset (FID is a main metric used to evaluate the performance of text-to-image models; the lower the score, the better).

Aspect ratio shift:

The ability to generate images with a non-standard aspect ratio, vertical or horizontal, as well as the standard square aspect.

Zero-shot image-to-image translations:

Image modification is conducted by (1) resizing the original image to 64 pixels, (2) adding noise through forward diffusion, and (3) using backward diffusion with a new prompt to denoise the image (in inpainting mode, the process happens in the local zone of the image). The style can be changed further through super-resolution modules via a prompt text description. This approach allows modifying style, patterns and details in output while maintaining the basic form of the source image – all without the need for fine-tuning.
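The noising step (2) in the image-to-image procedure above can be sketched in a few lines. This is a minimal illustration, not DeepFloyd IF's actual code: it assumes the standard closed-form forward diffusion, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, and a linear noise schedule with 1000 steps, neither of which is specified in this post. The function names and the random stand-in image are hypothetical.

```python
import math
import random


def linear_betas(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (an assumption; the post does not state IF's schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bar(betas, t):
    """Cumulative product of (1 - beta_s) for s = 0..t."""
    prod = 1.0
    for beta in betas[: t + 1]:
        prod *= 1.0 - beta
    return prod


def add_noise(pixels, t, betas, rng):
    """Sample x_t from q(x_t | x_0) in closed form for a flat list of pixel values."""
    a_bar = alpha_bar(betas, t)
    signal, sigma = math.sqrt(a_bar), math.sqrt(1.0 - a_bar)
    return [signal * p + sigma * rng.gauss(0.0, 1.0) for p in pixels]


rng = random.Random(0)
# Stand-in for a source image already resized to 64x64 RGB and scaled to [-1, 1].
x0 = [rng.uniform(-1.0, 1.0) for _ in range(64 * 64 * 3)]
betas = linear_betas()
# A larger t keeps less of the source image, so the new prompt has more influence.
x_t = add_noise(x0, t=500, betas=betas, rng=rng)
```

Backward diffusion (step 3) would then start from `x_t` rather than from pure noise, which is why the basic form of the source image is retained.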

Prompt examples

DeepFloyd IF can create different fusion concepts using prompts to arrange texts, styles and spatial relations to suit users’ needs.

Definitions and processes

DeepFloyd IF is a modular, cascaded, pixel diffusion model. We break down the definitions of each of these descriptors here:

Modular:

DeepFloyd IF consists of several neural modules (neural networks that can solve independent tasks, like generating images from text prompts and upscaling) whose interactions in one architecture create synergy.

Cascaded:

DeepFloyd IF models high-resolution data in a cascading manner, using a series of individually trained models at different resolutions. The process starts with a base model that generates unique low-resolution samples (a ‘player’), which are then upsampled by successive super-resolution models (‘amplifiers’) to produce high-resolution images.

Diffusion:

DeepFloyd IF’s base and super-resolution models are diffusion models, where a Markov chain of steps is used to inject random noise into data before the process is reversed to generate new data samples from the noise.

Pixel:

DeepFloyd IF works in pixel space. Unlike latent diffusion models (like Stable Diffusion), which run diffusion on latent representations, DeepFloyd IF implements diffusion directly at the pixel level.
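For readers who want the "Diffusion" definition above in symbols, the usual formulation from the denoising diffusion literature is given below. It is a generic sketch rather than DeepFloyd IF's exact parameterization, which this post does not specify; the noise schedule β_t, the text conditioning c, and the learned mean and covariance are notation introduced here for illustration. In a pixel diffusion model, x_0 is the image itself rather than a latent code.

$$
\begin{aligned}
q(x_t \mid x_{t-1}) &= \mathcal{N}\!\left(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t I\right) && \text{(forward step: inject noise)}\\
q(x_t \mid x_0) &= \mathcal{N}\!\left(x_t;\ \sqrt{\bar\alpha_t}\,x_0,\ (1-\bar\alpha_t) I\right), \quad \bar\alpha_t = \prod_{s=1}^{t}(1-\beta_s) && \text{(closed form after } t \text{ steps)}\\
p_\theta(x_{t-1} \mid x_t, c) &= \mathcal{N}\!\left(x_{t-1};\ \mu_\theta(x_t, t, c),\ \Sigma_\theta(x_t, t, c)\right) && \text{(learned reverse step)}
\end{aligned}
$$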

This generation flowchart represents a three-stage performance:

A text prompt is passed through the frozen T5-XXL language model to convert it into a qualitative text representation.

Stage 1: A base diffusion model transforms the qualitative text into a 64x64 image. This process is as magical as witnessing a vinyl record’s grooves turn into music. The DeepFloyd team has trained three versions of the base model, each with a different number of parameters: IF-I 400M, IF-I 900M and IF-I 4.3B.

Stage 2: To ‘amplify’ the image, two text-conditional super-resolution models (Efficient U-Net) are applied to the output of the base model. The first of these upscales the 64x64 image to a 256x256 image. Again, this model comes in different sizes: IF-II 400M and IF-II 1.2B.

Stage 3: The second super-resolution diffusion model is applied to produce a vivid 1024x1024 image. The final third stage model IF-III has 700M parameters. Note: We have not released this third-stage model yet; however, the modular character of the IF model allows us to use other upscale models – like the Stable Diffusion x4 Upscaler – in the third stage.
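The three stages above, and the possibility of swapping the third one, can be pictured as a small data structure. The sketch below is purely illustrative and does not reflect the DeepFloyd IF codebase: the `Stage` class, its fields, and the helper functions are hypothetical names, and only the resolutions and model names come from this post.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Stage:
    name: str
    in_size: Optional[int]  # None for the base stage, which starts from text only
    out_size: int


# Resolutions as described in the post; everything else is a hypothetical layout.
CASCADE = [
    Stage("IF-I base model", None, 64),
    Stage("IF-II super-resolution", 64, 256),
    Stage("IF-III super-resolution", 256, 1024),
]


def validate(cascade: List[Stage]) -> List[int]:
    """Check that each stage consumes the resolution the previous stage produces."""
    if not cascade or cascade[0].in_size is not None:
        raise ValueError("the cascade must start with a text-only base stage")
    for prev, nxt in zip(cascade, cascade[1:]):
        if nxt.in_size != prev.out_size:
            raise ValueError(
                f"{nxt.name} expects {nxt.in_size}px but {prev.name} produces {prev.out_size}px"
            )
    return [stage.out_size for stage in cascade]


def swap_stage(cascade: List[Stage], index: int, replacement: Stage) -> List[Stage]:
    """Return a new cascade with one stage replaced, keeping resolutions consistent."""
    swapped = list(cascade)
    swapped[index] = replacement
    validate(swapped)
    return swapped


resolutions = validate(CASCADE)
with_sd_upscaler = swap_stage(
    CASCADE, 2, Stage("Stable Diffusion x4 Upscaler", 256, 1024)
)
print(resolutions)  # prints [64, 256, 1024]
```

Because each stage only needs to agree with its neighbours on resolution, any upscaler that maps 256x256 to 1024x1024 can stand in for the third stage, which is the modularity the note in Stage 3 relies on.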

Dataset training

DeepFloyd IF was trained on a custom high-quality LAION-A dataset that contains 1B (image, text) pairs. LAION-A is an aesthetic subset of the English part of the LAION-5B dataset and was obtained after deduplication based on similarity hashing, extra cleaning, and other modifications to the original dataset. DeepFloyd’s custom filters removed watermarked, NSFW and other inappropriate content.
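To give a feel for what deduplication based on similarity hashing means, here is a toy sketch. It is not the LAION-A pipeline: the post does not say which hash or threshold was used, so the average hash, the Hamming-distance threshold of 2, and all function names below are assumptions chosen for brevity. Images are represented as small 2D lists of grayscale values.

```python
def average_hash(gray):
    """Hash a small grayscale grid: one bit per pixel, set if the pixel is above the mean."""
    flat = [value for row in gray for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a, b):
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def deduplicate(images, max_distance=2):
    """Keep the index of each image whose hash is not close to an already kept one."""
    kept, hashes = [], []
    for index, image in enumerate(images):
        h = average_hash(image)
        if all(hamming(h, seen) > max_distance for seen in hashes):
            hashes.append(h)
            kept.append(index)
    return kept


left_dark = [[0, 0, 10, 10] for _ in range(4)]           # dark left half, bright right half
brighter_copy = [[v + 1 for v in row] for row in left_dark]  # same picture, slightly brighter
top_dark = [[0] * 4, [0] * 4, [10] * 4, [10] * 4]        # a genuinely different layout

print(deduplicate([left_dark, brighter_copy, top_dark]))  # prints [0, 2]
```

The brighter copy hashes identically to the original and is dropped, while the image with a different layout survives. Production pipelines typically downscale each image first and use more robust hashes or embeddings.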

License

We are releasing DeepFloyd IF under a research license as a new model. Incorporating feedback, we intend to move to a permissive license release; please send feedback to deepfloyd@stability.ai. We believe that the research on DeepFloyd IF can lead to the development of novel applications across various domains, including art, design, storytelling, virtual reality, accessibility, and more. By unlocking the full potential of this state-of-the-art text-to-image model, researchers can create innovative solutions that benefit a wide range of users and industries.

As a source of inspiration for potential research, we pose several questions divided into three groups: technical, academic and ethical.

1. Technical research questions:

a) How can users optimize the IF model by identifying potential improvements that can enhance its performance, scalability, and efficiency?

b) How can output quality be improved by better sampling, guiding, or fine-tuning the DeepFloyd IF model?

c) How can techniques used to modify Stable Diffusion output, such as DreamBooth, ControlNet and LoRA, be applied to DeepFloyd IF?

2. Academic research questions:

a) Exploring the role of pre-training for transfer learning: Can DeepFloyd IF solve tasks other than generative ones (e.g. semantic segmentation) by using fine-tuning (or ControlNet)?

b) Enhancing the model's control over image generation: Can researchers explore methods to provide greater control over generated images? Aspects to control include specific visual attributes such as customized image style, tailored image synthesis, or other user preferences.

c) Exploring multi-modal integration to expand the model's capabilities beyond text-to-image synthesis: What are the best ways to integrate multiple modalities, such as audio or video, with DeepFloyd IF to generate more dynamic and context-aware visual representations?

d) Assessing the model's interpretability: To gain a clearer insight into DeepFloyd IF's inner processes, researchers can develop techniques to improve the model's interpretability, e.g. by allowing for a deeper understanding of the generated images' visual features.

3. Ethical research questions:

a) What are the biases in DeepFloyd IF, and how can we mitigate their impact? As with any AI model, DeepFloyd IF may contain biases stemming from its training data. Researchers can explore potential biases in generated images and develop methods to mitigate their impact, ensuring fairness and equity in the AI-generated content.

b) How does the model impact social media and content generation? As DeepFloyd IF can generate high-quality images from text, it is crucial to understand its implications for social media content creation. Researchers can study how the generated images affect user engagement, misinformation, and the overall quality of content on social media platforms.

c) How can researchers develop an effective fake image detector that utilizes our model? Can researchers design a DeepFloyd IF-backed detection system to identify AI-generated content intended to spread misinformation and fake news?

Access to weights can be obtained by accepting the license on the model cards at DeepFloyd's Hugging Face space: https://huggingface.co/DeepFloyd.

If you want to know more, check the model's website: https://deepfloyd.ai/deepfloyd-if.

The model card and code are available here: https://github.com/deep-floyd/IF.

Everyone is welcome to try the Gradio demo: https://huggingface.co/spaces/DeepFloyd/IF.

Join us in public discussions: https://linktr.ee/deepfloyd.

We welcome your feedback! Please send your comments and suggestions about DeepFloyd IF to deepfloyd@stability.ai